G-VOILA

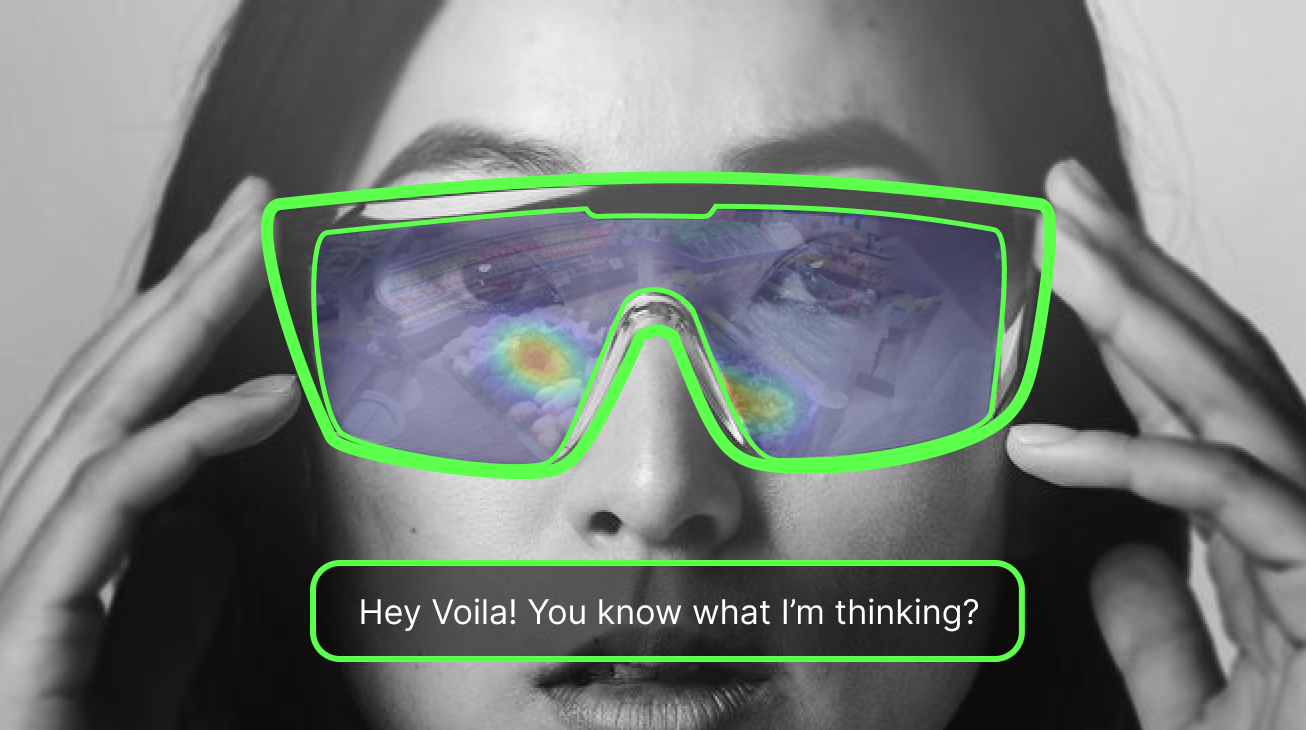

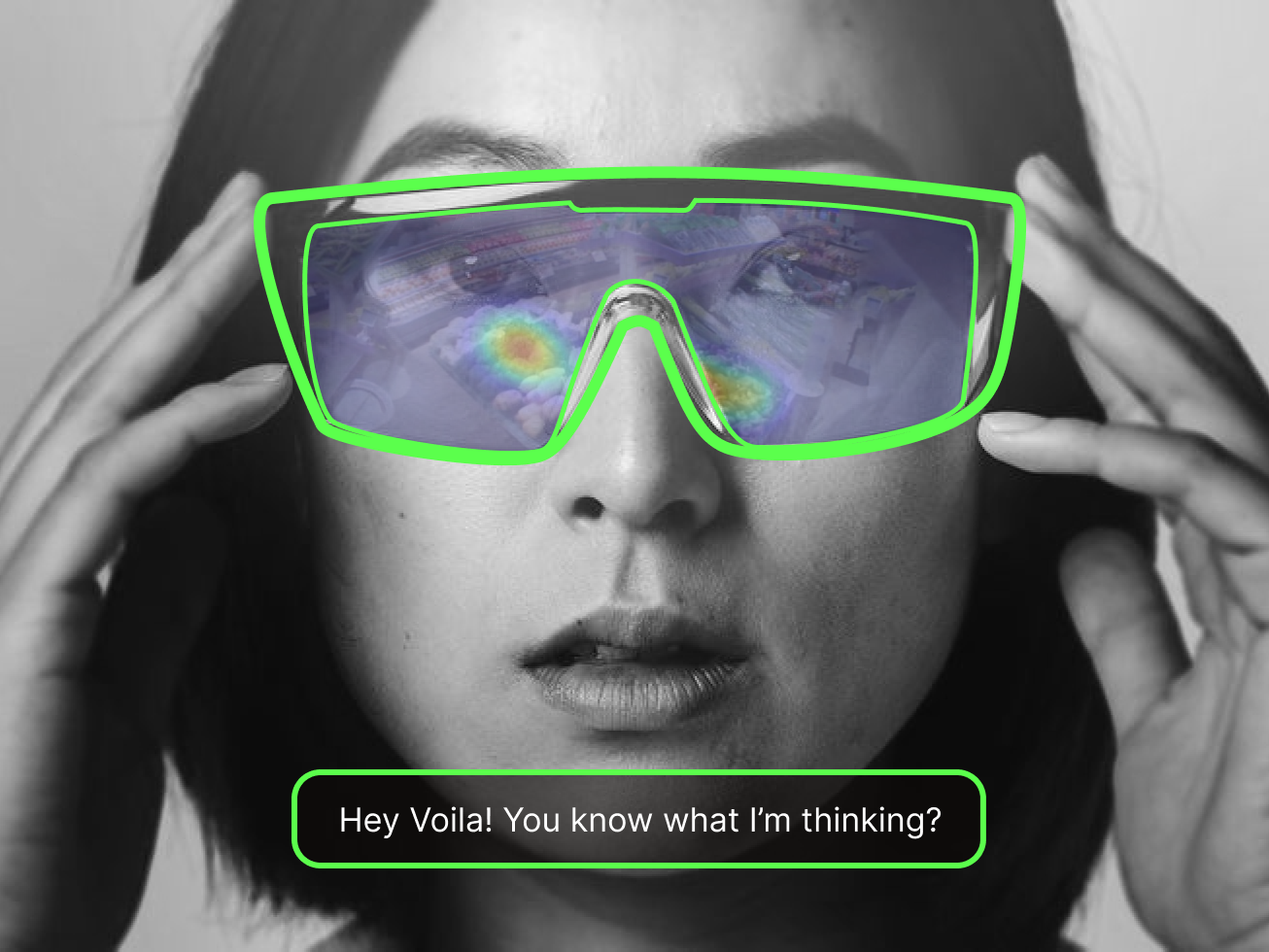

"Can AI understand us better if eye data is shared?"

Overview

“Can AI understand us better if eye data is shared?”

The information conveyed by eye movements is very rich in content. Within the movements and patterns of gaze, there lies information about preferences, intentions, as well as incomplete contextual information.

As developers of general artificial intelligence increasingly shift their focus towards incorporating more modalities of information such as images and sounds, we can’t help but wonder: If we provide such rich eye movement information to artificial intelligence, could it enable them to better understand us?

Role

2nd author (of HCI research paper)

Duration

2023 June – 2023 Sept. (4 months)

Director

Yuntao Wang, Tsinghua PI HCI Lab

Category

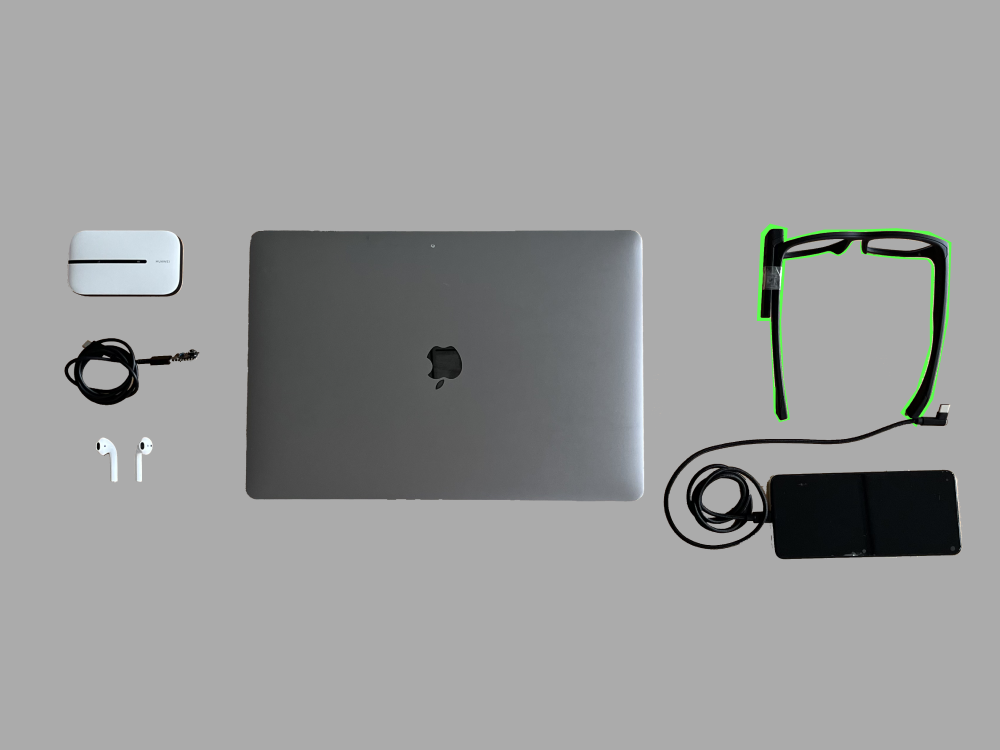

Smart glasses, Large Language Models, Gaze Tracking

Current Status

Submitted, IMWUT 24′

Abstract

The boundaries of information access have been radically redefined in today’s ubiquitous computing era. No longer confined to traditional mediums or specific locations, information retrieval (IR) has transcended physical limitations, allowing individuals to tap into vast information anywhere and anytime. In this paper, we present G-VOILA, a technique that integrates gaze and voice inputs to streamline information querying in everyday contexts on smart glasses. We conducted a user study to delve into the inherent ways users formulate queries with G-VOILA. By harnessing advanced computer vision techniques and large language models (LLMs) to decode intricate gaze and voice patterns, G-VOILA reveals the user’s unspoken interests, providing a semantic understanding of their query intent to deliver apt responses. In a follow-up study involving 16 participants across two real-life scenarios, our method showcased an 89% query intent recall. Additionally, users indicated strong satisfaction with the responses, a high matching score for query intent, and a pronounced preference for usage. These results validate the efficacy of G-VOILA for natural information retrieval in daily scenarios.

Research Gap

As the cyber and physical spaces quickly merge, people exhibit a significant demand for information retrieval (IR) anywhere at any time in their daily lives.

Traditional text-based querying methods, such as search engines or chatbots, are often restricted to specific devices and rigid input modalities, which can sometimes cause difficulties in expressing queries and interrupt user’s current workflow.

Behavioral Investigation

To gain insights into users’ natural expression patterns and potential engagement with G-Voila, we conducted a formative study in three daily scenarios.

Our findings indicate that users tend to omit specific context-related details in their expressions, assuming that G-Voila (AI assistant) can inherently comprehend such information through its sensing capabilities. We categorized users’ queries according to different levels of ambiguity; for further details, please refer to our paper.

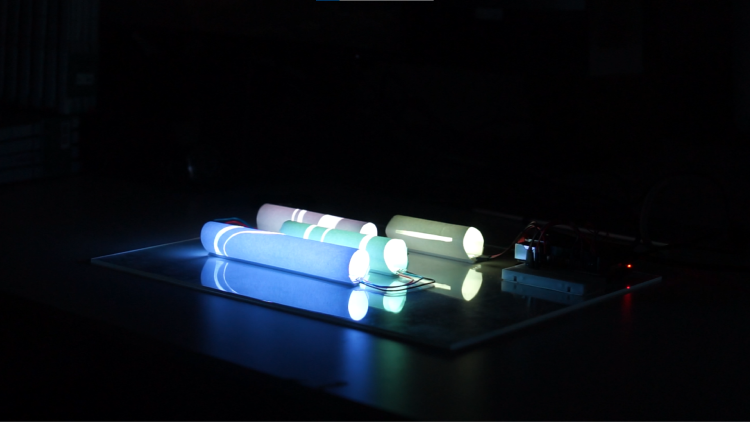

Methods

Utilizing the quantitative and qualitative analysis results obtained from the formative study, along with cutting-edge multi-modal large models and large language models, we proposed and implemented a six-stage pipeline for understanding query intent and answering questions effectively

User Evaluations

To evaluate G-Voila, we conducted a controlled user study in two daily life settings, obtaining an objective intent recall score of 0.89 and a precision score of 0.84. Additionally, G-Voila demonstrated a significantly higher subjective preference compared to our no-gaze baseline.